Debouncing in React Router v7

While hanging out in the Remix Discord, I happened to see Bryan Ross (@rossipedia on Discord and @rossipedia.com on Bluesky) share the technique I'm going to cover below. All credit for this concept should go to him. Clever guy, that one!

Prefer video? Check out the video version of this on YouTube.

Sometimes a user interaction can trigger multiple requests in quick succession, and you only care about the latest one. For example, you might have a table of records and want to give the user an input to filter the data, but that filtering needs to happen in the backend. Every keystroke would then initiate a new request to fetch the filtered data.

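```tsx
import { Form, useSubmit } from "react-router";
// Generated route types; adjust the path to match your route file
import type { Route } from "./+types/contacts";

export async function loader({ request }: Route.LoaderArgs) {
  const url = new URL(request.url);
  const q = url.searchParams.get("q");
  return {
    contacts: await fetchContacts(q),
  };
}

export default function Contacts({ loaderData }: Route.ComponentProps) {
  const submit = useSubmit();
  return (
    <div>
      {/* Every change submits the form: one request per keystroke */}
      <Form onChange={(e) => submit(e.currentTarget)}>
        <input name="q" />
      </Form>
      <ContactsTable data={loaderData.contacts} />
    </div>
  );
}
```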

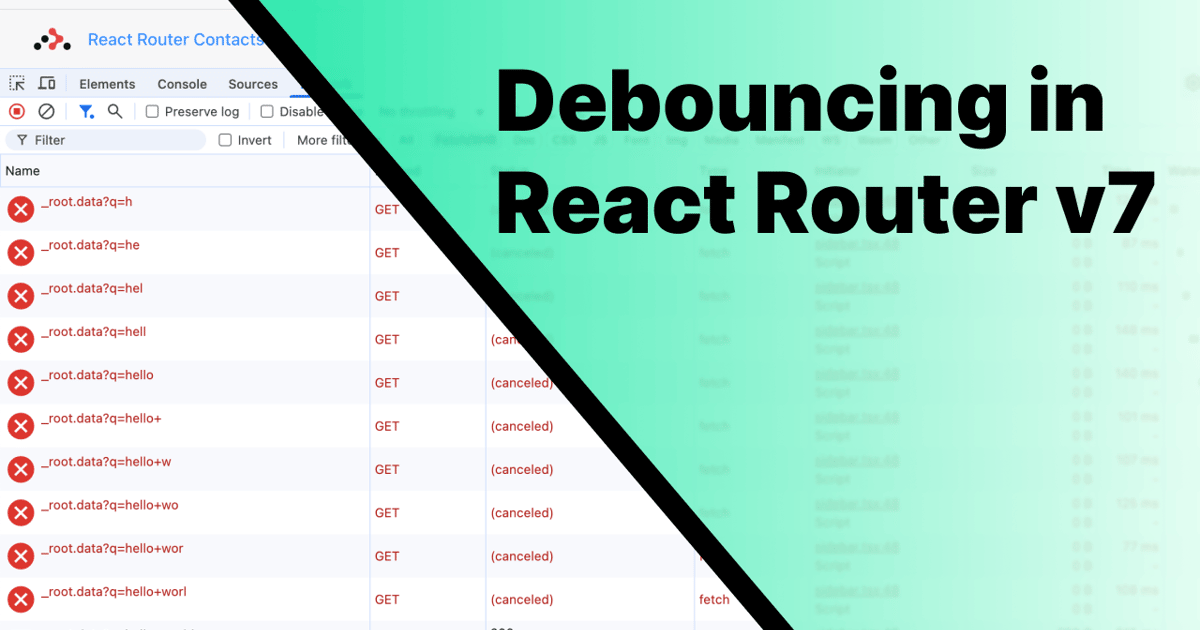

You would see something like this as the user typed "Dallas".

```
GET ...?q=D
GET ...?q=Da
GET ...?q=Dal
GET ...?q=Dall
GET ...?q=Dalla
GET ...?q=Dallas
```

React Router will cancel all but the latest in-flight request, so you don't have to manage any race conditions yourself, which is awesome. Buuuut, this is only half the story. Those requests are only cancelled from the frontend's perspective. They were still sent and will still trigger your loader (or action, depending on the form method), which wastes backend resources and could put unnecessary stress on your system.
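The frontend-only nature of that cancellation is easy to see in a small, framework-free simulation. This is a simplified sketch of the "latest wins" idea, not React Router's internals: `fakeServer` is a hypothetical stand-in for your loader, and `search` plays the router's role by aborting the previous request's controller whenever a new one starts.

```typescript
// Hypothetical stand-in for the backend: it counts every request it receives
// and responds after a short delay. It never sees the client's abort.
let serverHits = 0;

function fakeServer(q: string): Promise<string> {
  serverHits++;
  return new Promise<string>((resolve) =>
    setTimeout(() => resolve(`results for ${q}`), 50),
  );
}

// Client-side "latest wins": each new search aborts the previous one,
// and responses for aborted searches are ignored.
let current: AbortController | undefined;
const rendered: string[] = [];

async function search(q: string): Promise<void> {
  current?.abort();
  const controller = new AbortController();
  current = controller;

  // By this point the request has already reached the "server"
  const result = await fakeServer(q);
  if (!controller.signal.aborted) rendered.push(result);
}

for (const q of ["D", "Da", "Dal", "Dall", "Dalla", "Dallas"]) {
  void search(q);
}
```

Only the result for "Dallas" is rendered, yet `serverHits` is 6: aborting stops the client from using the older responses, but it can't un-send requests the server already received.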

It would be much better to introduce a slight delay, wait for the user to stop typing, and only then initiate the request to fetch new data. Waiting for activity to pause before acting like this is known as "debouncing".
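For reference, here's the classic timer-based form of debouncing as a minimal, framework-free TypeScript sketch. `debounce` and `fetchFiltered` are hypothetical names for illustration: each call restarts a timer, and the wrapped function only runs once calls stop for `ms` milliseconds.

```typescript
// Trailing-edge debounce: `fn` runs only once `ms` milliseconds pass
// without another call. Every new call cancels the pending one.
function debounce<Args extends unknown[]>(
  fn: (...args: Args) => void,
  ms: number,
): (...args: Args) => void {
  let timeoutId: ReturnType<typeof setTimeout> | undefined;

  return (...args: Args) => {
    // A newer call supersedes the pending one, so cancel its timer
    if (timeoutId !== undefined) clearTimeout(timeoutId);
    timeoutId = setTimeout(() => {
      timeoutId = undefined;
      fn(...args);
    }, ms);
  };
}

// Usage: "type" Dallas one keystroke at a time, faster than the delay.
const sent: string[] = [];
const fetchFiltered = debounce((q: string) => {
  sent.push(`GET ...?q=${q}`); // hypothetical stand-in for a real request
}, 400);

for (const q of ["D", "Da", "Dal", "Dall", "Dalla", "Dallas"]) {
  fetchFiltered(q);
}
```

Nothing is sent while the keystrokes keep coming; 400 ms after the last one, `sent` holds a single entry, `GET ...?q=Dallas`.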

useEffect?

You might be tempted to keep the filter in state, debounce that value, and reach for useEffect to initiate requests.

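```tsx
import { useEffect, useState } from "react";
import { Form, useSubmit } from "react-router";
import type { Route } from "./+types/contacts";
// useDebounce: a debounced-value hook, e.g. from a hooks library (not shown)

export default function Contacts({ loaderData }: Route.ComponentProps) {
  const [filter, setFilter] = useState<string>();
  const debouncedFilter = useDebounce(filter, 500);
  const submit = useSubmit();

  // Fire a request whenever the debounced value settles
  useEffect(() => {
    if (typeof debouncedFilter === "string") {
      submit({ q: debouncedFilter });
    }
  }, [submit, debouncedFilter]);

  return (
    <div>
      <Form>
        <input name="q" onChange={(e) => setFilter(e.target.value)} />
      </Form>
      <ContactsTable data={loaderData.contacts} />
    </div>
  );
}
```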

We've introduced four hooks, one of them being the dreaded useEffect for data fetching, which is a React cardinal sin. I fear I've just sent a shiver up David Khourshid's spine by merely mentioning this foul possibility. There must be a better way!

clientLoader to the rescue

React Router is built on web platform APIs like Request objects and AbortSignals, and we can take advantage of that in the clientLoader and clientAction functions.

If defined, these are called in the browser instead of your loader and action functions during client-side navigations and submissions. They can act as a gate where you decide whether a request should make it through to its server counterpart.

Let's introduce a clientLoader in our code.

```tsx
import { Form, useSubmit } from "react-router";
// Generated route types; adjust the path to match your route file
import type { Route } from "./+types/contacts";

// this runs on the server
export async function loader({ request }: Route.LoaderArgs) {
  const url = new URL(request.url);
  const q = url.searchParams.get("q");
  return {
    contacts: await fetchContacts(q),
  };
}

// this runs in the browser
export async function clientLoader({
  request,
  serverLoader,
}: Route.ClientLoaderArgs) {
  // intercept the request here and conditionally call serverLoader()
  return await serverLoader(); // <-- this initiates the request to your loader()
}

export default function Contacts({ loaderData }: Route.ComponentProps) {
  const submit = useSubmit();
  return (
    <div>
      <Form onChange={(e) => submit(e.currentTarget)}>
        <input name="q" />
      </Form>
      <ContactsTable data={loaderData.contacts} />
    </div>
  );
}
```

Now we just need some logic in the clientLoader to decide whether serverLoader should be called. React Router aborts a request's signal when a newer navigation supersedes it, so we can write a function that watches that signal. It returns a Promise that resolves after a specified amount of time unless the request is cancelled (its signal is aborted) first.

```tsx
// ...

// Resolves after `ms` milliseconds, unless the request's signal is
// aborted first, in which case it rejects with the abort reason.
function requestNotCancelled(request: Request, ms: number) {
  const { signal } = request;

  return new Promise<void>((resolve, reject) => {
    // If the signal is already aborted by the time we get here, reject
    if (signal.aborted) {
      reject(signal.reason);
      return;
    }

    // Schedule resolve to be called `ms` milliseconds from now
    const timeoutId = setTimeout(resolve, ms);

    // Listen for the abort event. If it fires, cancel the timer and reject
    signal.addEventListener(
      "abort",
      () => {
        clearTimeout(timeoutId);
        reject(signal.reason);
      },
      { once: true },
    );
  });
}

export async function clientLoader({
  request,
  serverLoader,
}: Route.ClientLoaderArgs) {
  await requestNotCancelled(request, 400);
  // If we make it past here, the promise from requestNotCancelled
  // wasn't rejected. It's been 400 ms and the request has
  // not been aborted. Proceed to initiate a request
  // to the loader.
  return await serverLoader();
}

// ...
```

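We're now debouncing requests to the loader based on the request signal, and we don't have to muddy up our UI component with a bunch of event listeners and hooks. 🎉 If a newer request arrives within 400 ms, the older request's signal aborts, requestNotCancelled rejects, and serverLoader is never called for it.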

Bryan's implementation had a different name and a slightly different API:

```tsx
export async function clientLoader({
  request,
  serverLoader,
}: Route.ClientLoaderArgs) {
  await abortableTimeout(400, request.signal);
  return await serverLoader();
}
```
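Bryan's abortableTimeout isn't shown here, so the following is a hypothetical reconstruction inferred only from the call site `abortableTimeout(400, request.signal)`. Taking the signal directly, rather than the whole Request, makes it usable with any AbortSignal. Unlike requestNotCancelled, this sketch also removes its abort listener when the timer wins. The bottom half simulates the router with plain AbortControllers: `gatedLoader` is a hypothetical stand-in for a clientLoader, and pushing to `serverCalls` stands in for `await serverLoader()`.

```typescript
// Hypothetical reconstruction based only on the call site: resolves after
// `ms` milliseconds, or rejects with the signal's reason as soon as it aborts.
function abortableTimeout(ms: number, signal: AbortSignal): Promise<void> {
  return new Promise<void>((resolve, reject) => {
    // Already aborted: reject right away without starting a timer
    if (signal.aborted) {
      reject(signal.reason);
      return;
    }

    const onAbort = () => {
      clearTimeout(timeoutId);
      reject(signal.reason);
    };

    const timeoutId = setTimeout(() => {
      // The timer won, so detach the listener instead of leaving it on the signal
      signal.removeEventListener("abort", onAbort);
      resolve();
    }, ms);

    signal.addEventListener("abort", onAbort, { once: true });
  });
}

// Simulated router: each "keystroke" aborts the previous request's signal
// and then runs the gate with a fresh one.
const serverCalls: string[] = [];

async function gatedLoader(q: string, signal: AbortSignal): Promise<string> {
  await abortableTimeout(400, signal);
  serverCalls.push(q); // stand-in for `await serverLoader()`
  return `results for ${q}`;
}

let controller: AbortController | undefined;
const outcomes: Promise<string>[] = [];

for (const q of ["D", "Da", "Dal", "Dall", "Dalla", "Dallas"]) {
  controller?.abort();
  controller = new AbortController();
  // Aborted requests reject; map them to a label so they can be inspected
  outcomes.push(gatedLoader(q, controller.signal).catch(() => `aborted ${q}`));
}
```

Only "Dallas" waits out the full 400 ms, so `serverCalls` ends up with that single entry, while the five earlier requests are rejected before they ever reach the "server".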

You get the idea, though, so run with the concept however you like.

